Global video consumption has never been higher. From streaming platforms like Netflix, Hulu, Apple TV+, and YouTube to enterprise training systems, online classes, webinars, and product explainers, video is now one of the world’s most consumed content formats. But as audiences become more geographically diverse, content creators face a crucial responsibility:

To create video content that is comprehensible across languages, regions, and varying accessibility needs.

This is where multilingual subtitling and subtitling translation come in. Subtitles help audiences follow along even if they do not understand the original audio language. And with AI transforming video production, multilingual subtitling can now take hours instead of days or weeks.

However, while AI-powered subtitling dramatically improves speed and scalability, it also introduces challenges—from synchronization errors and mistranslations to platform compatibility issues, regulatory restrictions, and workflow bottlenecks.

This blog explores how AI makes multilingual subtitling easier, the technical challenges industries face, and the solutions available today—especially for entertainment, education, and business content.

What Is Subtitling?

Subtitling is the process of displaying text on screen to represent spoken dialogue and relevant audio elements. Subtitles may be:

- Transcribed in the same language as the audio (e.g., English subtitles for English speakers)

- Translated into another language to localize content for global audiences (subtitling translation)

Subtitles differ from captions, which also include descriptions like [laughter] or [door closes]—useful for viewers who are deaf or hard of hearing.

To learn how professional teams handle scalable subtitle creation for global audiences, you can explore our professional subtitling services, which ensure accuracy, formatting consistency, and platform readiness.

Subtitle vs Caption: A Quick Difference

| Feature | Subtitles | Captions |
| --- | --- | --- |
| Purpose | Translate or display speech for viewers who can hear | Help viewers who cannot hear follow all audio content |
| Includes non-speech audio | No | Yes |
| Primary use cases | Movies, YouTube videos, streaming platforms | Accessibility, corporate training, online learning |

Therefore:

- If someone wants translated dialogue in Spanish for an English movie → Subtitles

- If someone is deaf or hard of hearing and needs every audio detail described → Captions

Both play critical roles in accessibility and audience engagement, especially on global platforms.
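Captions mark non-speech audio explicitly, so a caption track can be turned into a plain subtitle track by removing those cues. The following is a minimal sketch that assumes sound descriptions always appear in square brackets, such as [laughter] or [door closes]. `caption_to_subtitle` is a hypothetical helper. Real caption files may also use speaker labels, music symbols, or other conventions that this sketch ignores.

```python
import re

# A bracketed sound description such as [laughter] or [door closes].
SOUND_CUE = re.compile(r"\[[^\]]*\]")

def caption_to_subtitle(caption_text: str) -> str:
    """Strip bracketed non-speech cues, keeping only spoken dialogue."""
    kept = []
    for line in caption_text.split("\n"):
        # Remove the cues, then collapse leftover whitespace.
        cleaned = " ".join(SOUND_CUE.sub("", line).split())
        if cleaned:  # drop lines that contained only sound descriptions
            kept.append(cleaned)
    return "\n".join(kept)

if __name__ == "__main__":
    print(caption_to_subtitle("[door closes]\nWho's there? [laughter]"))
```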

Why AI Is Transforming Multilingual Subtitling

AI dramatically speeds up tasks that once took hours of manual work:

- Automatic speech recognition (ASR) for rapid transcription

- Translation engines that generate multilingual subtitle tracks

- Machine learning models that reduce manual labor and shorten production cycles

- Massive scalability for OTT platforms, agencies, and content publishers

But despite the advantages, AI subtitling is not perfect—and understanding the challenges is crucial for quality execution.

Benefits of AI Subtitling

| Benefit | Impact |
| --- | --- |
| Speed | Hours instead of days |
| Scalability | Translate into 20+ languages at once |
| Lower cost | Reduced manual processing |
| Rapid market expansion | OTT platforms can release content globally faster |

However, speed is only half the story. AI-powered subtitling also has limitations—especially where creative meaning, tone, humor, emotional context, and cultural relevance are important.

Businesses looking to automate large-scale media localization can benefit from expert-led video subtitling services that blend AI automation with human linguistic quality.

Common Challenges in AI-Generated Subtitles (and Solutions)

1. Technical & Synchronization Issues

One of the most common problems in automated subtitle creation is timing.

Timing errors and out-of-sync subtitles typically arise from:

- Frame rate mismatches

- Subtitle formats that don't match platform requirements

- Delays introduced by auto-transcription

- Rendering engine delays in the video player

- Editing or re-cutting video files after subtitles have been applied

Creators often notice subtitles drifting further out of sync as a video plays, or a sudden caption delay after the file format changes.

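For example, suppose a video is retimed from 23.98 fps to 25 fps during editing by speeding up playback, as in a classic PAL conversion, while its subtitles keep their original timestamps. Subtitles that were perfectly aligned slowly begin drifting, and the gap keeps growing. Because the video now plays about 4% faster, the mismatch reaches roughly 98 seconds by the end of a 40-minute video.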
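The following minimal Python sketch shows why this drift grows over time. It assumes the video was retimed by changing its playback speed while the subtitle timestamps were left unchanged. `drift_seconds` is a hypothetical helper. The value 24000/1001 is the exact frame rate that is usually rounded to 23.976 or 23.98 fps.

```python
# Exact NTSC-derived film rate, usually written as 23.976 or 23.98 fps.
NTSC_FILM_FPS = 24000 / 1001

def drift_seconds(elapsed_s: float, source_fps: float, target_fps: float) -> float:
    """Seconds by which subtitles trail the retimed video at elapsed_s.

    Assumes the video was sped up or slowed down from source_fps to
    target_fps while subtitle timestamps stayed the same. A negative
    result means the subtitles appear too early instead of too late.
    """
    # Material that used to play at elapsed_s now plays at this time.
    new_position = elapsed_s * source_fps / target_fps
    return elapsed_s - new_position

if __name__ == "__main__":
    for minutes in (10, 20, 30, 40):
        drift = drift_seconds(minutes * 60, NTSC_FILM_FPS, 25)
        print(f"{minutes:>2} min: subtitles trail the video by {drift:.1f} s")
```

The drift grows linearly with elapsed time. That is why a single constant offset cannot fix it: every timestamp has to be rescaled.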

Solution

- Standardize video frame rates before processing

- Use subtitle formats compatible with the destination platform (e.g., SRT, VTT, TTML)

- Apply human review to ensure subtitle placement feels natural

- Use QA workflows that correct the delay in bulk
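Bulk timing correction is usually a linear transform applied to every timestamp: new = old × scale + offset. The following is a minimal sketch that assumes SRT input, where each timing line looks like `HH:MM:SS,mmm --> HH:MM:SS,mmm`. `retime_srt` is a hypothetical helper and not part of any particular subtitling tool. For a video sped up from 23.98 to 25 fps, the scale would be (24000 / 1001) / 25.

```python
import re

# One SRT timestamp, e.g. 00:01:02,345
SRT_TIMESTAMP = re.compile(r"(\d{2,}):(\d{2}):(\d{2}),(\d{3})")

def _to_ms(h: str, m: str, s: str, ms: str) -> int:
    return ((int(h) * 60 + int(m)) * 60 + int(s)) * 1000 + int(ms)

def _from_ms(total: float) -> str:
    total = max(0, round(total))  # clamp: a cue cannot start before 0
    h, rest = divmod(total, 3_600_000)
    m, rest = divmod(rest, 60_000)
    s, ms = divmod(rest, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"

def retime_srt(srt_text: str, scale: float = 1.0, offset_ms: float = 0.0) -> str:
    """Apply new_time = old_time * scale + offset_ms to every cue timing line."""
    def fix(match):
        return _from_ms(_to_ms(*match.groups()) * scale + offset_ms)

    out = []
    for line in srt_text.split("\n"):
        # Only timing lines are touched, so dialogue text is never altered.
        out.append(SRT_TIMESTAMP.sub(fix, line) if "-->" in line else line)
    return "\n".join(out)

if __name__ == "__main__":
    sample = "1\n00:10:00,000 --> 00:10:02,000\nSorry I'm late.\n"
    print(retime_srt(sample, scale=(24000 / 1001) / 25))
```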

Enterprise localization systems today increasingly automate alignment checks, but human validation remains critical for entertainment releases, trailers, advertising, and streaming media.

2. Quality and Accuracy Problems

AI subtitling tools can produce grammatically correct but contextually inaccurate translations.

Common issues include:

- Literal subtitle translation problems

- Incorrect phrasing with complex grammar

- Cultural references misunderstood

- Idioms translated incorrectly (e.g., “break a leg” → literal versions)

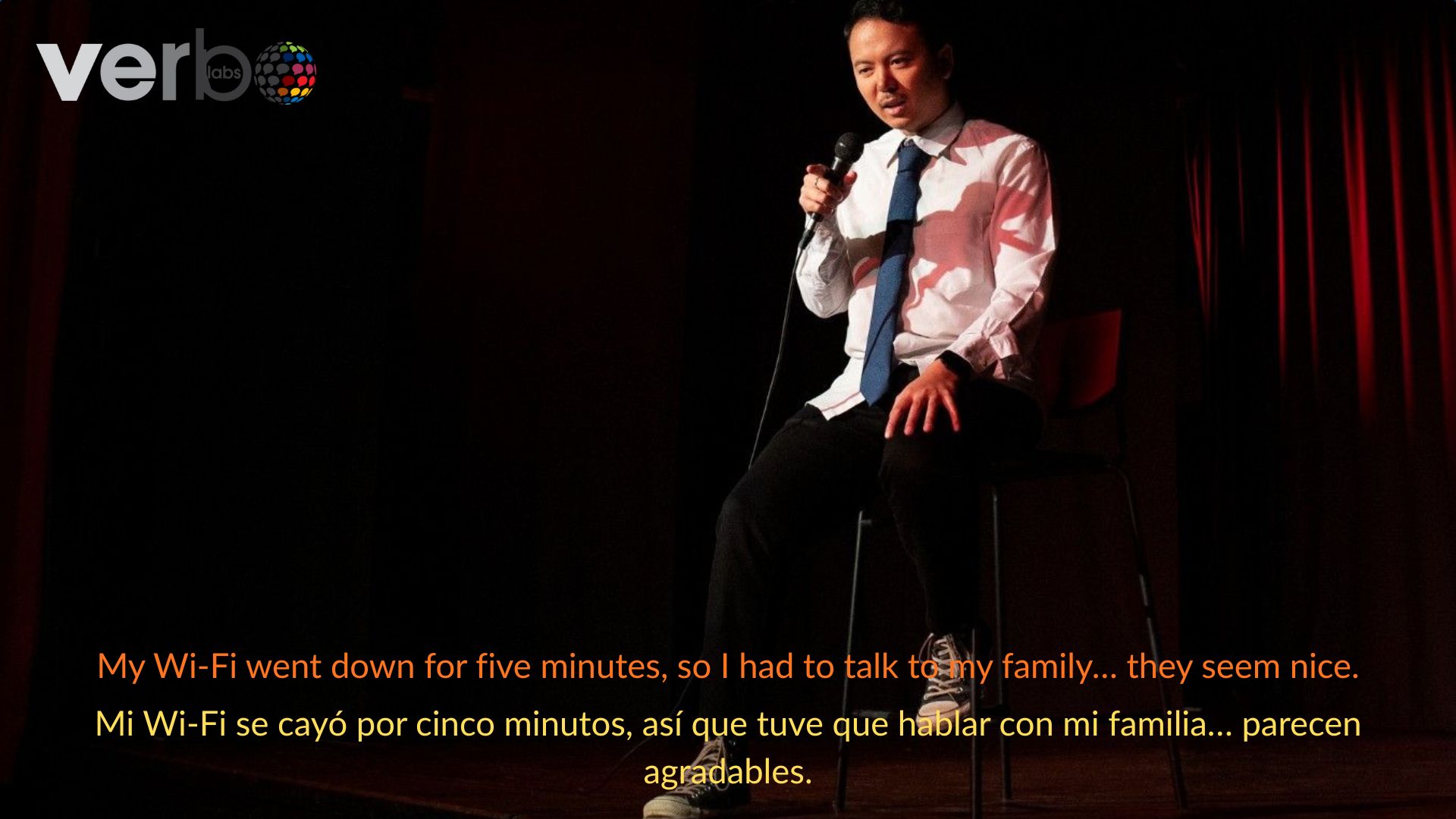

- Sarcasm, humor, subtext, or character emotion lost

For instance, idioms such as “break a leg” may get translated literally, creating embarrassing errors in films or web series.

A small mistranslation may cause:

- Humorous misunderstandings

- Legal misunderstandings in compliance or healthcare content

- Poor user experience in e-learning

In high-stakes industries—medical, aviation, law, defense—accuracy errors can be unacceptable.

Solution

- Combine AI translation with human linguistic editing

- Build custom glossaries for movie, game, or domain-specific terminology

- Apply quality assurance rules against mistranslations

- Fine-tune AI models on real, industry-specific content
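Glossary rules like these can be checked automatically before a linguist reviews the file. The following is a minimal sketch that assumes aligned (source, target) cue pairs and a glossary mapping each source term to its approved translation. `check_glossary` and the sample glossary entry are hypothetical. Plain substring matching ignores inflection and word order, which real QA tools handle more carefully.

```python
from dataclasses import dataclass

@dataclass
class GlossaryIssue:
    cue: int          # 1-based cue number
    source_term: str  # glossary term found in the source line
    expected: str     # approved translation missing from the target line

def check_glossary(pairs: list[tuple[str, str]], glossary: dict[str, str]) -> list[GlossaryIssue]:
    issues = []
    for cue, (source, target) in enumerate(pairs, start=1):
        source_l, target_l = source.lower(), target.lower()
        for term, approved in glossary.items():
            # Flag cues where the term appears but its approved rendering does not.
            if term.lower() in source_l and approved.lower() not in target_l:
                issues.append(GlossaryIssue(cue, term, approved))
    return issues

if __name__ == "__main__":
    glossary = {"break a leg": "mucha suerte"}  # idiom mapped to an idiomatic equivalent
    pairs = [
        ("Break a leg tonight!", "¡Rómpete una pierna esta noche!"),  # literal: flagged
        ("Break a leg!", "¡Mucha suerte!"),                            # approved: passes
    ]
    for issue in check_glossary(pairs, glossary):
        print(issue)
```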

A hybrid AI-plus-linguist workflow ensures that even subtle meaning stays intact across markets.

Using a structured subtitle quality assurance workflow ensures that timing drift, formatting errors, and translation inaccuracies are caught before publishing.
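A structured QA pass can be sketched as a set of automatic checks run over every cue. This minimal Python version assumes cues have already been parsed into start and end times in milliseconds plus a list of text lines. The default limits (42 characters per line, two lines, 20 characters per second, one-second minimum duration) are illustrative assumptions. Substitute the values from the target platform's style guide.

```python
from dataclasses import dataclass

@dataclass
class Cue:
    start_ms: int
    end_ms: int
    lines: list[str]

def qa_check(
    cues: list[Cue],
    max_chars_per_line: int = 42,
    max_lines: int = 2,
    max_cps: float = 20.0,
    min_duration_ms: int = 1000,
) -> list[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    prev_end = None
    for n, cue in enumerate(cues, start=1):
        # Timing: a cue must not start before the previous one ends.
        if prev_end is not None and cue.start_ms < prev_end:
            problems.append(f"cue {n}: overlaps the previous cue")
        prev_end = cue.end_ms

        duration_ms = cue.end_ms - cue.start_ms
        if duration_ms <= 0:
            problems.append(f"cue {n}: end time is not after start time")
            continue  # reading speed is meaningless without a positive duration
        if duration_ms < min_duration_ms:
            problems.append(f"cue {n}: on screen for only {duration_ms} ms")

        # Formatting: line count and characters per line.
        if len(cue.lines) > max_lines:
            problems.append(f"cue {n}: {len(cue.lines)} lines (max {max_lines})")
        for line in cue.lines:
            if len(line) > max_chars_per_line:
                problems.append(f"cue {n}: line has {len(line)} characters (max {max_chars_per_line})")

        # Reading speed in characters per second.
        cps = sum(len(line) for line in cue.lines) / (duration_ms / 1000)
        if cps > max_cps:
            problems.append(f"cue {n}: reading speed {cps:.1f} chars/s (max {max_cps})")
    return problems
```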

3. Challenges in Real-Time Subtitling

Live streaming, news broadcasts, panels, and webinars demand near-instant subtitling.

AI must:

- Detect speech

- Convert to text

- Translate

- Display on-screen

All of this must happen within seconds.
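These four steps form a pipeline. Running them as concurrent stages lets the next audio chunk be recognized while the previous one is still being translated. The following is a minimal asyncio sketch. `fake_recognize` and `fake_translate` are hypothetical stand-ins with fixed delays, used in place of real ASR and machine-translation engines. The sketch measures the time from when each chunk is picked up to when its caption is displayed.

```python
import asyncio
import time

# Hypothetical stand-ins: a real system would call ASR and MT engines here.
async def fake_recognize(chunk: str) -> str:
    await asyncio.sleep(0.01)  # simulated recognition time
    return chunk.upper()       # pretend this is the transcript

async def fake_translate(text: str) -> str:
    await asyncio.sleep(0.01)  # simulated translation time
    return f"[es] {text}"

async def recognize_stage(chunks, out_q):
    for chunk in chunks:
        picked_up = time.monotonic()  # when this audio chunk entered the pipeline
        await out_q.put((picked_up, await fake_recognize(chunk)))
    await out_q.put(None)  # end-of-stream marker

async def translate_stage(in_q, out_q):
    while (item := await in_q.get()) is not None:
        picked_up, text = item
        await out_q.put((picked_up, await fake_translate(text)))
    await out_q.put(None)

async def display_stage(in_q, shown):
    while (item := await in_q.get()) is not None:
        picked_up, caption = item
        # Record the caption together with its end-to-end latency in seconds.
        shown.append((caption, time.monotonic() - picked_up))

async def run_live_captions(chunks):
    to_translate, to_display = asyncio.Queue(), asyncio.Queue()
    shown = []
    # All three stages run concurrently, connected by the two queues.
    await asyncio.gather(
        recognize_stage(chunks, to_translate),
        translate_stage(to_translate, to_display),
        display_stage(to_display, shown),
    )
    return shown

if __name__ == "__main__":
    for caption, latency in asyncio.run(run_live_captions(["hello", "welcome"])):
        print(f"{caption!r} shown {latency * 1000:.0f} ms after pickup")
```

In a real deployment, each stage's delay adds to the on-screen lag. That is why faster ASR models and human moderators that edit the live stream without pausing it both matter.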

This becomes extremely difficult when:

- Speakers talk over each other

- Background noise is high

- Heavy accents reduce ASR accuracy

This leads to challenges like:

- Transcription latency and speech recognition lag

- Delayed live captions

- Reduced caption accuracy at live events

Solution

- Use ASR models trained to handle background noise

- Add predictive grammatical correction layers

- Allow human moderators to edit the live output stream

Many organizations now combine automated live subtitles with post-event human review for on-demand replays.

4. Localization Delays & Workflow Bottlenecks

Even with automation, many teams still face:

- Subtitling turnaround delay

- Translation workflow bottlenecks

- Multi-language production queues

- Version control confusion when deadlines shift

This is especially true for OTT platforms and training departments that publish large volumes of video. For a streaming company releasing new episodes every week in more than 10 languages, subtitling delays can push back releases and cost millions in lost revenue.

Solution

- Centralized subtitle management platforms

- Automated file lifecycle tracking

- Cloud-based editing with real-time project ownership

- Using global resource pools to scale editing based on demand
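Automated lifecycle tracking often comes down to a small state machine for each video and language pair. The following is a minimal sketch. The statuses, the allowed transitions, and the `SubtitleTracker` class are hypothetical examples, not the schema of any specific subtitle management platform.

```python
from enum import Enum

class Status(Enum):
    QUEUED = "queued"
    MACHINE_DRAFT = "machine draft"
    IN_REVIEW = "in review"
    APPROVED = "approved"
    PUBLISHED = "published"

# Legal status changes; anything else is rejected.
ALLOWED = {
    Status.QUEUED: {Status.MACHINE_DRAFT},
    Status.MACHINE_DRAFT: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.APPROVED, Status.MACHINE_DRAFT},  # reviewer may send it back
    Status.APPROVED: {Status.PUBLISHED},
    Status.PUBLISHED: {Status.IN_REVIEW},  # e.g. a re-cut reopens the track
}

class SubtitleTracker:
    """Tracks one subtitle file per (video, language) pair."""

    def __init__(self) -> None:
        self._status: dict[tuple[str, str], Status] = {}
        self.history: list[tuple[str, str, Status, Status]] = []

    def add(self, video: str, lang: str) -> None:
        self._status[(video, lang)] = Status.QUEUED

    def advance(self, video: str, lang: str, new: Status) -> None:
        current = self._status[(video, lang)]
        if new not in ALLOWED[current]:
            raise ValueError(f"{video}/{lang}: cannot move from {current.value} to {new.value}")
        self._status[(video, lang)] = new
        self.history.append((video, lang, current, new))  # audit trail

    def pending(self) -> list[tuple[str, str]]:
        """Everything not yet published, i.e. what could hold up a release."""
        return sorted(key for key, s in self._status.items() if s is not Status.PUBLISHED)
```

Rejecting illegal transitions keeps version control honest when deadlines shift. For example, a file cannot jump from machine draft straight to published without a recorded review.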

This reduces localization project delays and optimizes time-to-publish.

5. Accessibility & Compliance Issues

Many businesses must follow standards such as:

- ADA (Americans with Disabilities Act, a U.S. accessibility law)

- WCAG (Web Content Accessibility Guidelines)

- Local broadcasting regulations

Problems occur when:

- LMS portals restrict subtitle uploads

- Default video players don’t support multilingual closed captions

- Subtitle formatting doesn’t meet platform rules

This creates challenges in:

- Providing accessible subtitles for video

- Meeting closed caption regulations

- Achieving WCAG compliance for video

- Delivering multilingual accessibility subtitles

Solution

- Use subtitle output formats compatible with target LMS and CMS systems

- Ensure transcripts and captions meet accessibility criteria

- Use globally accepted formats (SRT, VTT)

- Provide alternative text transcripts for visually impaired audiences
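SRT and WebVTT are close enough that a basic conversion is mechanical. WebVTT files begin with a `WEBVTT` header line, and their timestamps use a period instead of a comma before the milliseconds. The following minimal sketch handles only those two differences. `srt_to_vtt` is a hypothetical helper. Styling tags that WebVTT does not support, such as SRT `<font>` tags, are not converted.

```python
import re

# SRT writes 00:00:01,000; WebVTT writes 00:00:01.000
SRT_TIME = re.compile(r"(\d{2,}:\d{2}:\d{2}),(\d{3})")

def srt_to_vtt(srt_text: str) -> str:
    """Convert basic SRT text to WebVTT (header and timestamp punctuation only)."""
    text = srt_text.lstrip("\ufeff").replace("\r\n", "\n")  # drop BOM, normalize newlines
    out = []
    for line in text.split("\n"):
        # Only timing lines change; dialogue lines (even with commas) pass through.
        out.append(SRT_TIME.sub(r"\1.\2", line) if "-->" in line else line)
    # WebVTT requires a "WEBVTT" header followed by a blank line.
    return "WEBVTT\n\n" + "\n".join(out).strip("\n") + "\n"
```

In an HTML5 player, each converted language file can then be attached as its own `<track kind="subtitles" srclang="es" src="...">` element, so viewers can switch between languages.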

Companies that take accessibility seriously not only stay compliant but also tend to see better engagement and longer session times.

6. Platform-Specific Subtitling Problems

Each major platform poses unique constraints:

1. YouTube

- Auto-generated subtitles are often reported to be only 60–70% accurate

- They struggle with accents, slang, and technical content

As a result, YouTube auto-caption accuracy is a common concern for creators.
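ASR accuracy is commonly measured with word error rate (WER). WER counts the word substitutions, deletions, and insertions needed to turn the automatic transcript into a human reference transcript, then divides by the number of reference words. The following is a minimal Python sketch using word-level edit distance. It lowercases the text and splits on whitespace, but does no other normalization (punctuation, numbers) of the kind real evaluations usually add.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # dist[i][j] = fewest edits turning the first i reference words
    # into the first j hypothesis words (Levenshtein distance over words).
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)
```

A rough accuracy figure is then `1 - word_error_rate(human_transcript, auto_captions)`. WER can exceed 1 when the output contains many inserted words, so this simple conversion can even go negative.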

2. Netflix

- Requires extremely high subtitling quality, including reading speed limits, character-per-line rules, and strict terminology consistency

- Because quality expectations for global releases are so high, Netflix subtitle translation issues are a frequent concern for creators and vendors
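Character-per-line rules are usually applied together with a preference for balanced two-line subtitles. The following is a minimal sketch that assumes a 42-character limit, a commonly used value that should be checked against the platform's own style guide. It picks the space that makes the two lines closest in length. `balanced_split` is a hypothetical helper. Professional line breaking also avoids separating linguistic units, such as an article and its noun, which this sketch ignores.

```python
def balanced_split(text: str, max_chars: int = 42) -> list[str]:
    """Return one line, or two lines of similar length, each within max_chars."""
    text = " ".join(text.split())  # normalize whitespace
    if len(text) <= max_chars:
        return [text]
    best = None
    for i, ch in enumerate(text):
        if ch != " ":
            continue
        top, bottom = text[:i], text[i + 1:]
        if len(top) <= max_chars and len(bottom) <= max_chars:
            diff = abs(len(top) - len(bottom))
            if best is None or diff < best[0]:
                best = (diff, [top, bottom])
    if best is None:
        # Too long for two lines: the text must be split across cues instead.
        raise ValueError("text does not fit in two lines; split it across cues")
    return best[1]

if __name__ == "__main__":
    for line in balanced_split("I told you we should have left the party much earlier tonight."):
        print(line)
```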

3. Hulu

Users have reported Hulu subtitles falling out of sync.

4. Apple TV

Users have reported subtitle delays on Apple TV.

Professional subtitling companies test subtitles across platforms to prevent playback errors once content goes live.

5. Learning Management Systems (LMS Platforms)

Common issues include:

- Subtitle uploads failing in Canvas LMS

- Caption problems in Moodle

- Some systems not allowing multiple subtitle streams

Solution

- Use professional QA and subtitle verification

- Deliver platform-optimized subtitle formats

- Apply performance checks during integration

How AI Subtitling Benefits Industries

AI multilingual subtitling helps industries create accurate, scalable subtitles quickly. It boosts engagement, accessibility, and global reach across entertainment, education, and business.

1. Film & Entertainment

The entertainment industry gains massively from AI subtitling:

- Faster subtitle production for international rollouts

- Cost-effective localization at scale

- Audience engagement improves instantly

- Multiple markets reached with the same content investment

For example, a streaming platform can produce subtitles in 10 languages for a 45-minute episode in hours instead of days, with human QA ensuring narrative integrity.

2. Education & E-Learning

- Higher comprehension and learning retention

- Students with disabilities get equal access

- Global institutions can deliver content to multilingual classrooms

- Subtitles improve LMS course completion rates

Research on captioned video suggests that students who watch with subtitles tend to retain more information than students who watch without them.

3. Businesses & Enterprises

- Better training efficiency in multinational teams

- Higher employee knowledge retention in onboarding and certification videos

- More accessible corporate communication

- Subtitled content can be translated repeatedly with minimal effort

Where VerboLabs Helps

AI solves speed and scalability—but quality, contextual accuracy, cultural alignment, and synchronization still need expert oversight. VerboLabs bridges this gap by combining automation with industry-leading human review.

With VerboLabs:

- Support for 120+ languages, spanning Asian, European, Middle Eastern, African, and Latin American markets

- Professional subtitle translation and QA

- Context-aware localization for culturally accurate messaging: no mistranslations, idioms that make sense, emotional meaning kept intact, and cultural norms respected

- Fast turnaround for OTT, e-learning, marketing, entertainment, and corporate content

- Expert handling of synchronization, platform formatting, timing drift, and accessibility compliance

VerboLabs ensures subtitles are not just technically correct—but meaningful, smooth to read, and impactful across global markets.

If you want AI speed with human reliability, VerboLabs delivers both at scale.

| AI Challenge | How VerboLabs Solves It |
| --- | --- |
| Subtitle timing drift | Timecode audits and correction |
| Incorrect translations | Human linguist review |
| LMS subtitle failures | Platform testing before publishing |
| Inaccurate ASR | Domain-trained models + manual editing |
| Inconsistency across versions | Centralized project workflows |

You can explore real-world subtitling success stories to see how global clients have scaled multilingual content across platforms.

Conclusion

Multilingual subtitling has become critical in today’s global video ecosystem, helping businesses, educators, and entertainment platforms reach wider audiences with clarity and accessibility. While AI has dramatically sped up subtitle creation, challenges like synchronization issues, literal translations, platform inconsistencies, and accessibility gaps still require expert human oversight.

By addressing the challenges of AI multilingual subtitling through a blend of automation and professional linguistic review, organizations can produce accurate, culturally appropriate subtitles that improve content quality and the viewer experience.

VerboLabs helps bridge this gap with support for 120+ languages, rigorous QA workflows, and platform-ready subtitling tailored for OTT, corporate learning, and global media releases. From improving timing accuracy to ensuring regulatory compliance and contextual translation, VerboLabs delivers subtitles that are fast, reliable, and ready for worldwide audiences.

Need expert support with AI multilingual subtitling challenges? Get fast, accurate subtitles at scale with VerboLabs.

FAQs

What is subtitling?

Subtitling is the process of adding on-screen text to represent spoken dialogue in a video. It may be in the same language or translated into another language for international audiences.

What is subtitling translation?

Subtitling translation converts spoken dialogue from the original language to another language while considering timing, readability, and context.

What is the difference between subtitles and captions?

Subtitles translate or display speech, while captions also include sound effects and non-speech audio cues for accessibility.

Why do subtitles go out of sync?

Common causes include frame rate mismatches, delayed processing, and platform rendering limitations. Professional QA and platform-specific formatting help correct this.

Is AI subtitling accurate?

AI is fast but can misinterpret idioms, slang, accents, and noisy audio. The best results come from blending AI automation with human quality review.

Why are YouTube auto-captions often inaccurate?

YouTube relies on automatic speech recognition, which struggles with fast speakers, complex terminology, and background noise.

How can I make subtitles accessibility-compliant?

Use closed-caption formats that follow accessibility standards, and verify compatibility with the target platforms or LMS before publishing.